In today’s fast-paced tech world, DevOps engineers play an important role in connecting development and operations. But what makes them special? One key skill is their ability to use scripting to automate and simplify workflows, making everyday tasks faster and easier. This guide will take you through the basics all the way to advanced scripting in DevOps, giving you the tools you need, no matter your experience level.

What is Scripting in DevOps?

Scripting means writing a set of instructions that a computer can follow automatically. In DevOps, scripting helps create “automated helpers” to handle repetitive tasks, set up environments, and manage infrastructure. Instead of doing tasks by hand, scripts do the work for you, which means fewer mistakes and more time to focus on important things.

Scripts are usually lightweight programs written in scripting languages like Bash, Python, or PowerShell that interact with your operating system, cloud environments, or tools like Docker and Kubernetes. Scripting is a key part of automation in DevOps, helping teams achieve consistent, efficient, and reliable results.

In practice, this speeds up work such as building code, deploying applications, and monitoring systems. Whether the task is setting up cloud resources, managing configurations, or deploying containers, a script turns it into a workflow that runs the same way every time.


Why is Scripting Important in DevOps?

Automation is at the heart of DevOps, and scripting is the tool that makes automation possible. By using scripts, DevOps teams can create repeatable processes, making sure every action is done the same way every time. This consistency helps avoid human mistakes.

Think of tasks like building and deploying software. Without scripts, these steps would be slow and prone to errors. Scripts not only save time but also run the process the same way each time, even as projects grow bigger and more complex.

Scripting allows DevOps engineers to automate tasks like:

- Automating Builds and Deployments: Scripts can automate the process of building, testing, and deploying code, reducing manual work and making everything faster.

- Configuration Management: Scripting helps set up environments the same way every time, so there are no differences between development, testing, and production.

- Infrastructure Provisioning: With Infrastructure as Code (IaC), scripts and declarative configuration files set up and manage cloud infrastructure, so the same environment can be recreated on demand.

- Monitoring and Alerting: Scripts can automate monitoring, collect data, and send alerts when something goes wrong, making sure problems are handled quickly.
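To make the monitoring bullet concrete, here is a minimal Python sketch of a disk-usage check. The 90% threshold, the `check_disk` and `disk_used_percent` names, and the print-based "alert" are all assumptions made for illustration; a real setup would send the message to whatever alerting channel the team uses.

```python
import shutil

THRESHOLD_PERCENT = 90.0  # hypothetical alert threshold


def disk_used_percent(path="/"):
    """Return the percentage of disk space used on the filesystem holding path."""
    usage = shutil.disk_usage(path)
    return usage.used / usage.total * 100


def check_disk(path="/", threshold=THRESHOLD_PERCENT):
    """Return an alert message if usage is above threshold, otherwise None."""
    percent = disk_used_percent(path)
    if percent > threshold:
        return f"ALERT: {path} is {percent:.1f}% full"
    return None


if __name__ == "__main__":
    message = check_disk()
    print(message or "Disk usage is within limits.")
```

Run on a schedule (for example from cron), a check like this covers the "collect data and send alerts" part of the bullet in a very small way.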

Scripting is also useful for managing complex workflows. For example, deploying a new version of an application might involve many steps, like stopping services, updating settings, testing, and restarting services. Scripts help automate all these steps, making sure they happen in the right order and without mistakes.
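The idea of running steps in a fixed order and stopping when one fails can be sketched as follows. This is only an illustration: `run_steps` and the four placeholder actions are hypothetical names, and a real script would call tools such as `systemctl` or `kubectl` instead of printing.

```python
def run_steps(steps):
    """Run (name, action) pairs in order and stop at the first failure.

    Returns the names of the steps that completed.
    """
    completed = []
    for name, action in steps:
        print(f"Running step: {name}")
        try:
            action()
        except Exception as error:
            print(f"Step '{name}' failed: {error}")
            break
        completed.append(name)
    return completed


# Placeholder actions that only print; they stand in for real commands
def stop_services():
    print("services stopped")


def update_settings():
    print("settings updated")


def run_tests():
    print("tests passed")


def restart_services():
    print("services restarted")


if __name__ == "__main__":
    run_steps([
        ("stop services", stop_services),
        ("update settings", update_settings),
        ("run tests", run_tests),
        ("restart services", restart_services),
    ])
```

Because the loop breaks on the first exception, a failed test step would prevent the restart step from running, which is the "right order, without mistakes" behavior described above.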

Scripts also make it possible to integrate different tools and processes seamlessly. Imagine having to manually connect multiple tools, such as version control systems, testing tools, deployment servers, and monitoring systems. This would take a lot of time and effort, and it could easily lead to mistakes. With scripting, all these tools can be connected in a smooth, automated workflow that runs reliably every time.

Popular Scripting Languages in DevOps

Different scripting languages have their strengths and use cases. Here are some of the most popular ones used in DevOps:

- Bash: Best for Unix/Linux-based systems. Bash is great for automating tasks and shell commands in Linux environments. It is often used for managing servers, working with files, and automating tasks in Linux.

```bash
#!/bin/bash
# A simple Bash script to deploy an application
set -e  # stop at the first failing command

echo "Starting deployment..."
git pull origin main
docker-compose up -d
echo "Deployment complete!"
```

Bash scripts are ideal for automating server setup, deployments, and basic system tasks. They are easy to write and require minimal resources, making them a go-to solution for quick automation.

- Python: Great for automation, managing cloud resources, and working across platforms. Python is versatile and widely used for tasks like cloud automation and working with AWS, Azure, and Google Cloud. Its easy-to-read syntax and many libraries make it perfect for managing cloud resources and creating flexible scripts.

```python
import boto3

ec2 = boto3.client('ec2')

def create_instance():
    # The AMI ID below is a placeholder; use one that exists in your region
    ec2.run_instances(
        ImageId='ami-0abcdef1234567890',
        InstanceType='t2.micro',
        MinCount=1,
        MaxCount=1
    )
    print("EC2 instance created!")

create_instance()
```

Python suits more complex scripts that involve logic, work with APIs, or manage cloud services, and its libraries take care of much of the low-level work.

- PowerShell: Best for Windows-based systems. PowerShell is a powerful tool for automating tasks in Windows environments and for managing Azure. Its combination of command-line and scripting features makes it perfect for managing Windows servers, Active Directory, and cloud infrastructure.

```powershell
# Restart a service in Windows
Restart-Service -Name "Spooler"
```

PowerShell is often used by IT admins for managing Microsoft environments. It helps in automating server tasks, managing configurations, and handling various aspects of the Windows operating system.

- Ruby: Often used for configuration management. Chef recipes are written in a Ruby-based DSL, and Puppet itself is implemented in Ruby. Ruby has an easy-to-read syntax and is used for managing server configurations and automating infrastructure.

```ruby
# Chef resource: refresh the package lists and upgrade installed packages
execute 'update-upgrade' do
  command 'apt-get update && apt-get upgrade -y'
end
```

Ruby is particularly useful for infrastructure automation and is often used to create reusable modules that make setting up and managing servers easier.


Getting Started with Scripting: Basics for Beginners

If you are new to scripting, it might seem scary at first. But starting with the basics will help you get comfortable quickly. Here’s a simple example of a script written in Bash to do a basic task:

```bash
#!/bin/bash
# This script prints a welcome message
echo "Hello, DevOps! Let's automate everything!"
```

Save this script as `welcome.sh`, make it executable with `chmod +x welcome.sh`, and run it with `./welcome.sh`. You’ve just automated your first task!

Key Concepts to Learn

- Variables: Store and reuse data in your scripts to make them dynamic.

- Control Structures: Use loops and conditions to control the flow of your script, letting it make decisions and repeat actions.

- Functions: Break your code into reusable parts that make your scripts easier to read and maintain.

Learning these concepts will help you write scripts that are more useful, easier to understand, and easier to maintain.
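The same three concepts look like this in Python, one of the languages introduced earlier. This is a small sketch for illustration only: the service names, the `describe` function, and the length limit are all made up.

```python
# Variable: data the script reuses
services = ["web", "api", "worker"]
max_name_length = 5  # hypothetical limit, for illustration only


# Function: a reusable unit of logic
def describe(service, limit=max_name_length):
    # Control structure: a condition decides which message to build
    if len(service) > limit:
        return f"{service}: name longer than {limit} characters"
    return f"{service}: ok"


# Control structure: a loop repeats the action for every service
for service in services:
    print(describe(service))
```

Changing the list or the limit changes the script's behavior without touching the logic, which is the point of keeping data in variables.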

Examples of Bash Scripts

- Loop Example: Loop through a list of server names and run a command on each one.

```bash
#!/bin/bash
servers=(server1 server2 server3)

for server in "${servers[@]}"
do
  echo "Pinging $server..."
  ping -c 1 "$server"
done
```

This loop script helps automate tasks on multiple servers. For example, you could use it to check the health of all your servers or deploy updates.

- Conditional Example: Check if a directory exists and create it if it doesn’t.

```bash
#!/bin/bash
dir="/path/to/directory"

if [ ! -d "$dir" ]; then
  echo "Directory does not exist. Creating..."
  mkdir -p "$dir"
else
  echo "Directory already exists."
fi
```

This script uses a conditional statement to check if a directory exists. If it doesn’t, the script creates it, which helps in maintaining consistency when setting up environments.

Intermediate Scripting: Automating Workflows

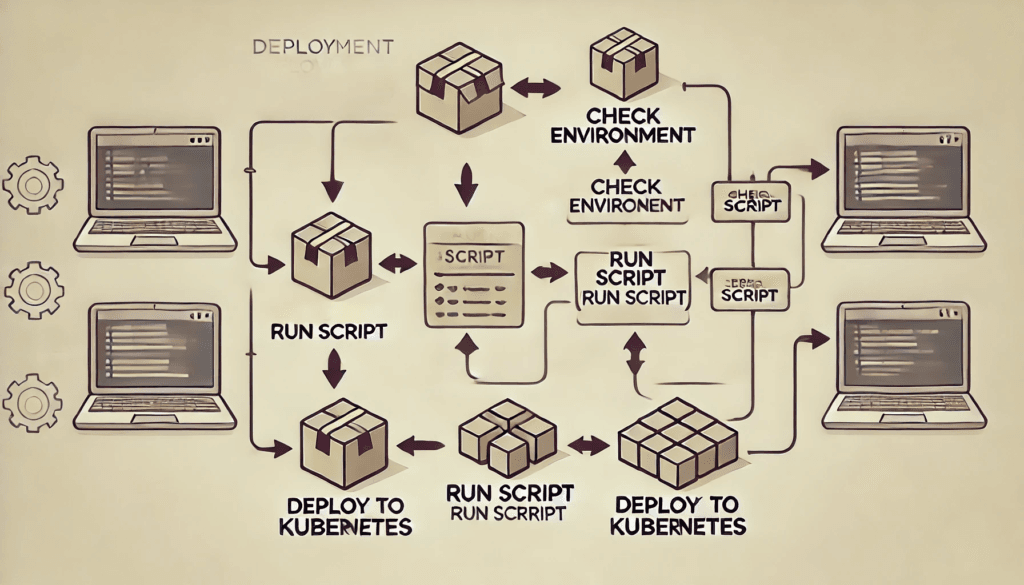

Once you know the basics, it’s time to put scripting into action. Automating workflows is important for making sure that repetitive tasks are done reliably and consistently. Here’s a Python script to automate part of a CI/CD pipeline.

Python Script Example for Deployment Automation

```python
import subprocess

def deploy_app(environment):
    if environment not in ['staging', 'production']:
        raise ValueError("Invalid environment! Choose 'staging' or 'production'.")

    # Pass the arguments as a list (no shell) and raise an error if kubectl fails
    deploy_command = ["kubectl", "apply", "-f", f"deployment_{environment}.yaml"]
    subprocess.run(deploy_command, check=True)
    print(f"Deployment to {environment} completed successfully!")

deploy_app('staging')
```

This script automates deployments using Kubernetes. It checks that the environment is valid and then applies the matching manifest, reducing manual errors and speeding up deployment.

This type of automation is very helpful when managing multiple environments, like staging and production. Instead of manually changing settings or running commands for each environment, you can use a script to automate the process.
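One way to handle several environments is to keep the per-environment details in a single mapping and build the command from it. The sketch below assumes manifest files named after the `deployment_<environment>.yaml` pattern; the `MANIFESTS` table and `build_deploy_command` are hypothetical names. It only prints the commands (a dry run) rather than executing them.

```python
import shlex

# Hypothetical manifest file names, one per environment
MANIFESTS = {
    "staging": "deployment_staging.yaml",
    "production": "deployment_production.yaml",
}


def build_deploy_command(environment):
    """Return the kubectl command for an environment as an argument list."""
    if environment not in MANIFESTS:
        raise ValueError(f"Unknown environment: {environment!r}")
    return ["kubectl", "apply", "-f", MANIFESTS[environment]]


if __name__ == "__main__":
    for env in MANIFESTS:
        # Dry run: show the command instead of running it
        print(shlex.join(build_deploy_command(env)))
```

Adding a new environment then means adding one entry to the mapping, and the printed commands can be reviewed before anything touches a cluster.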

Additional Python Examples

- Automate File Backup: Use Python to back up files from one directory to another to make sure data is safe.

```python
import os
import shutil

source = '/path/to/source'
destination = '/path/to/destination'

def backup_files():
    # Create the destination directory if it does not exist yet
    os.makedirs(destination, exist_ok=True)
    for filename in os.listdir(source):
        full_file_name = os.path.join(source, filename)
        # Copy regular files only; subdirectories are skipped
        if os.path.isfile(full_file_name):
            shutil.copy(full_file_name, destination)
    print("Backup completed!")

backup_files()
```

Automating file backups helps ensure that important data is regularly copied and saved, reducing the risk of data loss.
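A backup is only useful if the copies match the originals. As a possible follow-up to a backup like the one above, this sketch compares SHA-256 checksums of the files in two directories. `sha256_of` and `verify_backup` are illustrative names, and like the backup script it looks at top-level files only.

```python
import hashlib
from pathlib import Path


def sha256_of(path):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_backup(source_dir, backup_dir):
    """Return names of source files that are missing or differ in the backup."""
    problems = []
    for item in sorted(Path(source_dir).iterdir()):
        if not item.is_file():
            continue  # subdirectories are ignored
        copy = Path(backup_dir) / item.name
        if not copy.is_file() or sha256_of(item) != sha256_of(copy):
            problems.append(item.name)
    return problems
```

An empty list from `verify_backup('/path/to/source', '/path/to/destination')` would mean every top-level file has an identical copy in the backup directory.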

- Send Notification Email: Automate sending an email after a successful deployment, which can help keep the team updated.

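```python
import smtplib
from email.mime.text import MIMEText

def send_notification():
    msg = MIMEText("Deployment completed successfully.")
    msg['Subject'] = "Deployment Status"
    msg['From'] = "your-email@example.com"
    msg['To'] = "recipient@example.com"

    # Port 587 with STARTTLS is a common setup (an assumption here); check your mail provider
    with smtplib.SMTP('smtp.example.com', 587) as server:
        server.starttls()  # encrypt the connection before sending credentials
        # In real use, read the password from an environment variable or secret store
        server.login("your-email@example.com", "your-password")
        server.sendmail(msg['From'], [msg['To']], msg.as_string())

send_notification()
```

Creating a Bucket using Terraform and CloudFormation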

Infrastructure as Code (IaC) is a fundamental concept in DevOps that helps automate and manage infrastructure using code. Below are examples of how to create an Amazon S3 bucket using Terraform and AWS CloudFormation.

Creating an S3 Bucket using Terraform

Terraform is a popular tool for defining and provisioning infrastructure in a cloud environment. Here is an example Terraform configuration to create an S3 bucket:

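```hcl
# Note: the inline acl, versioning, and lifecycle_rule arguments are deprecated
# in AWS provider v4 and later, which use separate resources such as
# aws_s3_bucket_versioning and aws_s3_bucket_lifecycle_configuration.
provider "aws" {
  region = "us-east-1"
}

resource "aws_s3_bucket" "my_bucket" {
  bucket = "my-devops-terraform-bucket" # bucket names are globally unique; pick your own
  acl    = "private"

  versioning {
    enabled = true
  }

  lifecycle_rule {
    enabled = true

    transition {
      days          = 30
      storage_class = "STANDARD_IA"
    }
  }
}
```

Steps to Execute: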

- Save the configuration as `main.tf`.

- Run `terraform init` to initialize the directory containing the Terraform configuration.

- Run `terraform apply` to create the S3 bucket.

Creating an S3 Bucket using CloudFormation

AWS CloudFormation allows you to define and manage AWS resources using templates. Here is an example CloudFormation template to create an S3 bucket:

```yaml
Resources:
  MyS3Bucket:
    Type: "AWS::S3::Bucket"
    Properties:
      BucketName: "my-devops-cloudformation-bucket"
      VersioningConfiguration:
        Status: "Enabled"
      LifecycleConfiguration:
        Rules:
          - Status: "Enabled"
            Transitions:
              - StorageClass: "STANDARD_IA"
                TransitionInDays: 30
```

Steps to Deploy:

- Save the template as `s3_bucket.yaml`.

- Use the AWS CLI to create the stack:

  ```bash
  aws cloudformation create-stack --stack-name my-s3-stack --template-body file://s3_bucket.yaml
  ```

- Verify the stack creation by using the command:

  ```bash
  aws cloudformation describe-stacks --stack-name my-s3-stack
  ```

Automation Tools in DevOps

Automation is one of the key principles of DevOps. By automating tedious and repetitive tasks, DevOps teams can save time, reduce errors, and improve overall efficiency. Below are some of the most popular tools and techniques for mastering automation in DevOps.

Continuous Integration Tools

Continuous Integration (CI) is a key component of the DevOps workflow. CI tools automatically build and test code changes as soon as they are committed to the repository. This ensures that any issues are caught early on, before they become bigger problems. Some popular CI tools include:

- Jenkins

- Travis CI

- CircleCI

- GitLab CI

Configuration Management Tools

Configuration Management (CM) tools allow DevOps teams to automate the configuration of servers and other infrastructure components. With CM tools, teams can define configurations as code, making it easy to test and deploy changes. Some popular CM tools include:

- Ansible

- Puppet

- Chef

- SaltStack
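A core idea behind these tools is idempotence: applying the same configuration twice should leave the system unchanged the second time. The sketch below shows that idea for a single file. It is not how any of the listed tools is implemented, and `ensure_line` and the `app.conf` file name are hypothetical.

```python
import tempfile
from pathlib import Path


def ensure_line(path, line):
    """Append line to the file at path unless it is already present.

    Returns True if the file was changed, False if it was already correct.
    """
    file = Path(path)
    existing = file.read_text().splitlines() if file.exists() else []
    if line in existing:
        return False
    existing.append(line)
    file.write_text("\n".join(existing) + "\n")
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as folder:
        config = Path(folder) / "app.conf"  # hypothetical config file
        print(ensure_line(config, "max_connections=100"))  # first run changes the file
        print(ensure_line(config, "max_connections=100"))  # second run changes nothing
```

Reporting whether anything changed is what lets a configuration run be repeated safely and still tell you when a server had drifted.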

Infrastructure as Code (IaC) Tools

Infrastructure as Code (IaC) is the practice of defining infrastructure components as code. IaC tools allow DevOps teams to manage infrastructure in a more repeatable and scalable way. Some popular IaC tools include:

- Terraform

- CloudFormation

- Azure Resource Manager

- Google Cloud Deployment Manager
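Conceptually, IaC tools compare the infrastructure you describe with what actually exists and work out the difference. The following is a deliberately simplified sketch of that comparison, assuming resources are plain name-to-settings dictionaries. The `plan` function and the bucket names are made up, and real tools also track state, dependencies, and provider APIs.

```python
def plan(desired, actual):
    """Compare desired and actual resources (dicts of name -> settings).

    Returns the resource names to create, update, and delete, each sorted.
    """
    create = sorted(name for name in desired if name not in actual)
    delete = sorted(name for name in actual if name not in desired)
    update = sorted(
        name for name in desired
        if name in actual and desired[name] != actual[name]
    )
    return {"create": create, "update": update, "delete": delete}


if __name__ == "__main__":
    # Hypothetical resources, for illustration only
    desired = {
        "bucket-a": {"versioning": True},
        "bucket-b": {"versioning": True},
    }
    actual = {
        "bucket-b": {"versioning": False},
        "bucket-c": {"versioning": False},
    }
    print(plan(desired, actual))
```

Here `bucket-a` would be created, `bucket-b` updated, and `bucket-c` deleted. Because the result depends only on the two descriptions, running the same plan again after applying it yields no changes.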

Orchestration Tools

Orchestration tools allow DevOps teams to manage complex workflows involving multiple components and services. With orchestration tools, teams can define workflows as code, making it easy to automate and scale processes. Some popular orchestration tools include:

- Kubernetes

- Docker Swarm

- Mesos

- Nomad
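"Workflows as code" often comes down to declaring which component depends on which and letting a program work out a valid order. The sketch below uses Python's standard `graphlib` module for that. The service names and the `start_order` function are hypothetical, and this illustrates the concept rather than how any of the tools above schedules work.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical services: each maps to the set of services it depends on
dependencies = {
    "database": set(),
    "cache": set(),
    "api": {"database", "cache"},
    "web": {"api"},
}


def start_order(deps):
    """Return a start order in which every service follows its dependencies."""
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    print(start_order(dependencies))
```

Whatever order the two independent services come out in, `api` always follows both of them and `web` comes last. A circular dependency raises `graphlib.CycleError` instead of producing an order.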

Conclusion

Mastering scripting and automation is essential for success in DevOps. By leveraging the right tools and techniques, teams can streamline their workflows, improve their efficiency, and deliver higher-quality software. From using popular scripting languages like Bash, Python, and PowerShell to adopting advanced tools like Terraform, Kubernetes, and Jenkins, automation makes DevOps processes more effective and reliable. If you’re new to automation in DevOps, start by exploring some of the tools and techniques outlined in this article. With practice and experience, you’ll soon become a master of automation, capable of tackling even the most challenging workflows and environments.