The AI Power Bill Is Now a Product Decision: An Operator’s Playbook for Cost, Speed, and Reliability

In October 2025, a Reddit thread in r/technology exploded around a Bloomberg headline: “AI Data Centers Are Skyrocketing Regular People’s Energy Bills.” The post itself was simple, but the comment section wasn’t. Engineers, …

The Real AI Employment Shift: Why Entry-Level Work Is Shrinking and What Teams Must Build Next

A Reddit thread in r/technology this week hit a nerve: a report claiming AI is cutting entry-level openings in coding and customer service. The post spread because it matches what many operators are seeing …

The New AI Operations Playbook: What Reddit Practitioners Get Right About Cost, Quality, and Control

For most of 2024, the dominant AI conversation was simple: bigger model, better model, end of story. In 2025 and early 2026, that story started to break. The most useful discussions on Reddit, especially in …

AI’s New Scoreboard: Why Benchmarks Alone No Longer Predict Who Wins

If you spend time in AI circles, you see the same argument every week: a new model tops a leaderboard, and people declare a winner. A recent Reddit post in r/artificial pushed back hard on this pattern, arguing that …

The New AI Stack Is a Portfolio, Not a Monolith: What Reddit’s Power Users Get Right About Model Strategy

For years, AI adoption debates sounded like sports fandom: pick one model, defend it, and call it strategy. That framing is collapsing. A recent Reddit discussion from an operator running thousands …

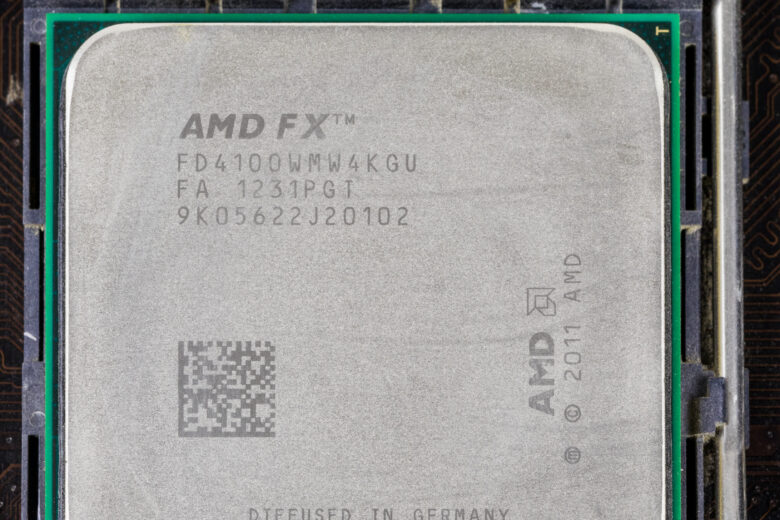

The $600 AI Revolution: How Apple’s Secret Chip Is Changing Local Compute Forever

Thirteen months ago, running a frontier-level language model at usable speeds meant spending $6,000 on hardware. Today, a $600 Mac Mini can run a superior model at the same speed, and that’s just the beginning of what Apple …

The AI Deployment Gap: Why Reddit’s Practitioners Are Moving From Model Hype to Operational Discipline

The biggest AI story in 2026 is not a model launch. It is a credibility problem. Across Reddit’s technical communities, practitioners keep repeating the same pattern: demos are easy, production is hard, and value is …

The New AI Moat Is Operational: What Reddit’s Practitioners Reveal About Cost, Speed, and Reliability

For a few years, the AI story was simple: bigger model, better model, winner takes all. That story is now incomplete. Across Reddit’s most practical AI communities, the center of gravity has …

The New AI Bottleneck Isn’t Model Intelligence. It’s Deployment Economics.

If you skim AI headlines, the story sounds simple: bigger models, bigger spending, bigger impact. But Reddit’s technical communities are telling a more complicated story from the ground. In r/LocalLLaMA, r/MachineLearning, r/artificial, and r/technology, the recurring theme is not “who …

The Edge AI Benchmark Mirage: Why the Same INT8 Model Can Collapse Across Real Phones

The Edge AI Benchmark Mirage: Why the Same INT8 Model Can Collapse from 91.8% to 71.2% on Real Phones A Reddit post in r/MachineLearning landed like a small alarm bell for anyone shipping AI on-device: one team ran the same INT8 model, same ONNX export, across five Snapdragon chipsets and …

The AI Benchmark Hangover: What Reddit Is Getting Right About Real-World Deployment in 2026

If you spend enough time in AI circles right now, you’ll hear the same argument in different accents: leaderboards are exciting, but they are no longer enough to make product decisions. Over the last week, threads …

AI ROI in 2026: What Reddit Gets Right (and Wrong) About Productivity, Local Models, and Agent Hype

In the past two months, three Reddit storylines kept colliding: developers saying AI coding tools slow them down, operators sharing surprisingly practical local-model benchmarks, and founders posting “agentic automation” wins that sound either …