Docker has revolutionized the way developers build, ship, and run applications. With its ability to create containers—lightweight, portable environments that package your application and its dependencies—Docker has become a crucial tool in modern software development. However, creating efficient Docker containers requires more than just writing a simple Dockerfile. There are several best practices that, when followed, can help you create containers that are not only functional but also optimized for performance, security, and size.

Whether you’re a developer or a system administrator, understanding Dockerfile best practices will help you create reliable, efficient, and secure container images. This guide covers the tips and techniques you can use to build better Docker images and avoid common pitfalls. Ready to make your Dockerfile more efficient? Let’s dive in!

Introduction

Docker is an amazing tool for containerizing applications, making deployment easier, and ensuring that your software works consistently regardless of the environment. Dockerfiles are the heart of the Docker process, containing a series of instructions to build your container images. Writing Dockerfiles effectively is an art that requires an understanding of how to make them as lean, secure, and maintainable as possible. Let’s explore how to achieve this!

In the era of microservices and cloud computing, Docker has become an indispensable tool for application development and deployment. Containerization allows developers to package applications and their dependencies into a single, portable unit, ensuring predictability, scalability, and rapid deployment. However, the efficiency of your containers largely depends on how optimally your Dockerfile is written.

In this comprehensive guide, we will delve into a wide array of best practices for writing Dockerfiles that help you build lightweight, fast, and secure containers. We will explore Dockerfile basics, security considerations, and techniques to avoid common pitfalls, as well as tools that help you optimize Docker images for better performance.

Why Use Dockerfiles?

A Dockerfile is essentially the blueprint for your Docker container. It defines all the necessary components, from the base image to configuration, application installation, and runtime specifications. Writing efficient Dockerfiles is crucial to ensure that your containers are optimized for the environment they are running in.

By using Dockerfiles, you benefit from:

- Automation: Dockerfiles automate the process of building images.

- Portability: The instructions within the Dockerfile ensure the application will run the same way, no matter the environment.

- Reproducibility: A well-written Dockerfile ensures consistency across environments, reducing unexpected behavior.

- Scalability: Docker images can be used to scale applications horizontally.

- Security: Dockerfiles help implement security best practices by providing a structured way to define your container environment.

Basic Structure of a Dockerfile

A Dockerfile consists of various instructions, each defining a part of the image:

- FROM: Specifies the base image from which you are building your new image. This is the starting point for all Dockerfiles.

- RUN: Executes commands during the image building process, such as installing packages.

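- COPY or ADD: Copies files from the build context into the image filesystem. ADD can additionally fetch remote URLs and unpack local tar archives, so COPY is the safer default for plain file copies.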

- CMD or ENTRYPOINT: Defines the command that will run when a container starts. While CMD is often used for default commands, ENTRYPOINT makes a container behave more like an executable.

- ENV: Sets environment variables.

- EXPOSE: Documents the ports on which the container is expected to listen at runtime. It does not publish them; that is done with the -p or -P flag of docker run.

- WORKDIR: Sets the working directory for any RUN, CMD, ENTRYPOINT, COPY, or ADD instructions that follow it.

- VOLUME: Creates a mount point with the specified path and marks it as holding externally mounted volumes from the host or other containers.

Understanding these components will help us develop more efficient and optimized Docker images.
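To see how these instructions fit together, here is a minimal sketch of a complete Dockerfile. The application file app.py, the port 8000, and the /data path are hypothetical and chosen only for illustration.

```dockerfile
# Start from a pinned base image
FROM python:3.9-slim

# Set an environment variable that is available at build time and at runtime
ENV APP_ENV=production

# All following instructions run relative to /app
WORKDIR /app

# Copy the (hypothetical) application file from the build context into the image
COPY app.py .

# Run a command at build time; here it only verifies that the interpreter works
RUN python --version

# Document the port the application is assumed to listen on
EXPOSE 8000

# Mark /data as a mount point for externally managed data
VOLUME /data

# ENTRYPOINT fixes the executable; CMD supplies default arguments that
# can be overridden on the docker run command line
ENTRYPOINT ["python"]
CMD ["app.py"]
```

With this layout, starting a container without arguments runs python app.py, while arguments given after the image name on the docker run command line replace only the CMD part.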

Dockerfile Best Practices

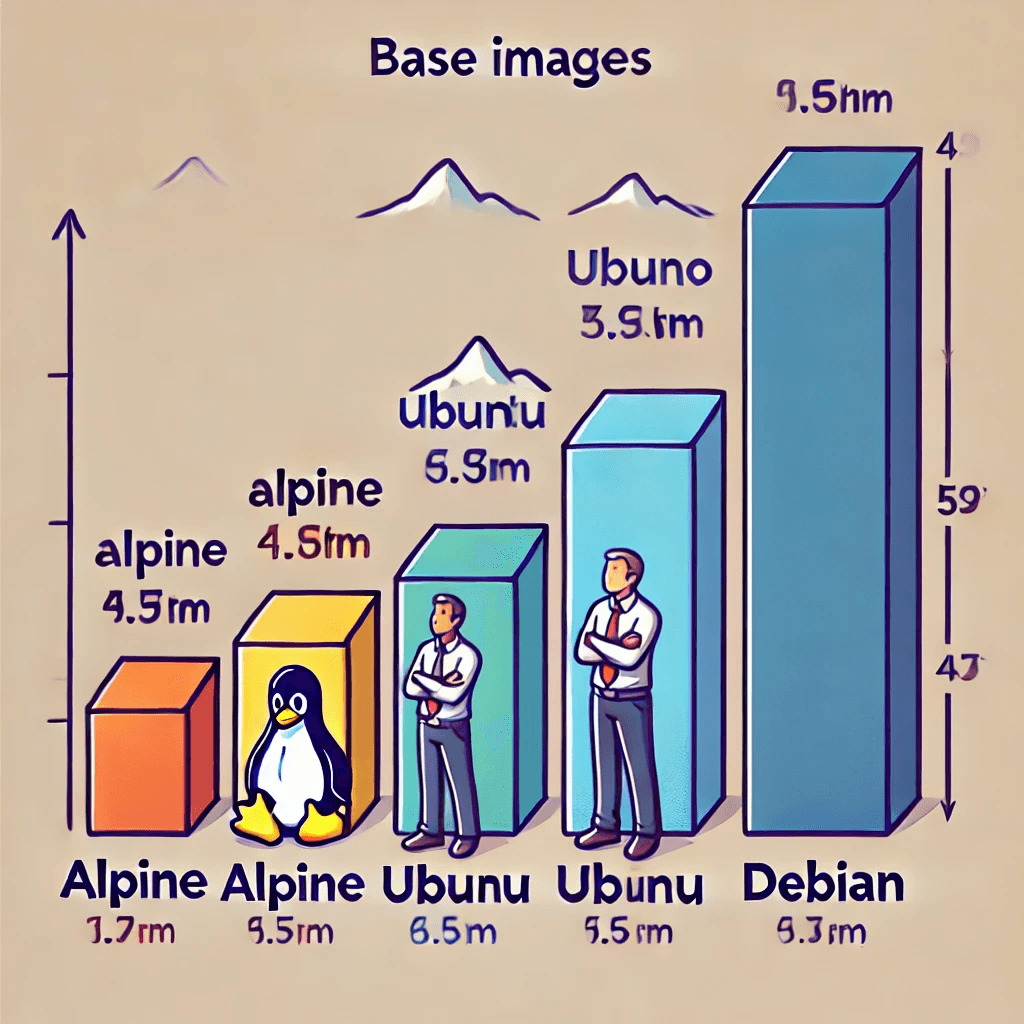

1. Use a Lightweight Base Image

The base image serves as the foundation of your Docker image, and selecting the right one is critical. Using a lightweight base image can significantly reduce the size of your Docker image. A smaller image not only makes deployments faster but also reduces the attack surface, improving security.

Alpine Linux is a popular minimal image around 5 MB in size that is widely used for building efficient containers.

    FROM alpine:3.15

Pros:

- Small size, which reduces the overall image size.

- Enhanced security due to its minimalistic nature.

- Faster downloads and deployments.

Cons:

- May require additional configuration as it uses musl instead of glibc, which means some packages might behave differently or need adjustments.

Scratch is another base image that is entirely empty, making it ideal for languages like Go or Rust that can compile to static binaries.

    FROM scratch
    COPY myapp /myapp
    CMD ["/myapp"]
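The scratch example assumes that myapp already exists as a statically linked binary. One way to produce it, sketched here under the assumption that the build context holds a Go module whose main package is at its root, is to compile in a separate build stage (multi-stage builds are covered in practice 5) with cgo disabled:

```dockerfile
# Build stage: compile a statically linked binary
# (assumes a Go module with its main package at the root of the build context)
FROM golang:1.18 AS build
WORKDIR /src
COPY . .
# CGO_ENABLED=0 avoids linking against a C library, which scratch does not provide
RUN CGO_ENABLED=0 go build -o /myapp .

# Final stage: an empty image that contains only the binary
FROM scratch
COPY --from=build /myapp /myapp
CMD ["/myapp"]
```

Because scratch contains no shell, CMD has to use the exec (JSON array) form, and anything else the program needs at runtime, such as CA certificates, has to be copied in explicitly.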

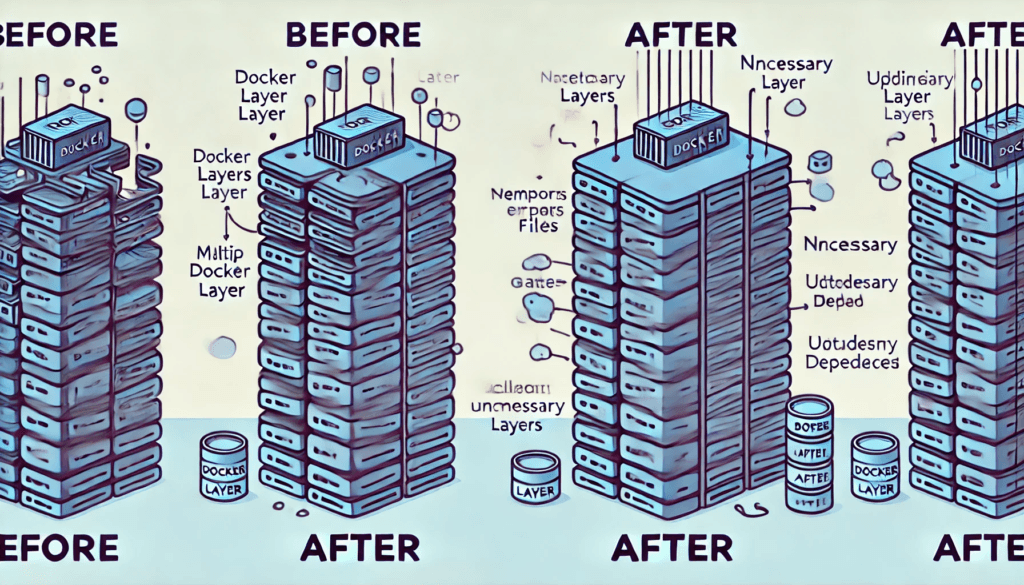

2. Minimize the Number of Layers

Instructions that modify the filesystem (RUN, COPY, and ADD) each add a new layer to the resulting image; other instructions only add metadata. Keeping the number of layers low can help reduce image size and complexity.

Combining commands within a single RUN instruction is one of the best ways to achieve this:

    RUN apt-get update && apt-get install -y \
        curl \
        vim && \
        apt-get clean && rm -rf /var/lib/apt/lists/*

Using a single RUN command helps reduce the number of layers by combining actions, thereby reducing the overall size of the image.

3. Order Instructions Efficiently

Docker employs caching mechanisms to accelerate image builds. Ordering instructions in your Dockerfile efficiently is key to leveraging these caching mechanisms effectively. Place instructions that are less likely to change (like installing dependencies) earlier in the Dockerfile and place frequently modified instructions (like copying application source code) towards the bottom.

Example:

    # Install dependencies first
    RUN apt-get update && apt-get install -y python3

    # Copy the source code after dependencies are installed
    COPY . /app

This strategy allows Docker to reuse cached layers whenever possible, significantly speeding up builds.
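The same idea can be taken one step further by copying only the dependency manifest before installing, so that source-code changes do not invalidate the dependency layer. A sketch for a Python project, assuming a requirements.txt and an app.py at the root of the build context (both hypothetical):

```dockerfile
FROM python:3.9-slim
WORKDIR /app

# Copy only the dependency manifest first; this layer changes rarely
COPY requirements.txt .

# Reused from cache as long as requirements.txt is unchanged
RUN pip install --no-cache-dir -r requirements.txt

# Copy the frequently changing source code last
COPY . .

CMD ["python", "app.py"]
```

With this ordering, editing app.py only invalidates the final COPY layer, and the pip install step is served from the cache.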

4. Avoid Using the Latest Tag

Using the latest tag for your base image may seem convenient but can lead to unpredictable builds. The latest tag changes over time, which means that builds may fail or behave unexpectedly when different versions of the base image are pulled.

Instead, use a specific version to ensure consistency and predictability:

    # Bad practice
    FROM python:latest

    # Good practice
    FROM python:3.9

This approach ensures that your image builds will remain consistent across different environments.
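If the pinned version should be easy to change in one place, it can be declared with ARG before FROM. This is only a sketch; the variable name PYTHON_VERSION is an arbitrary choice.

```dockerfile
# An ARG declared before FROM can be used in the FROM line
ARG PYTHON_VERSION=3.9
FROM python:${PYTHON_VERSION}-slim

# Redeclare the ARG (without a value) to make it visible inside the build stage
ARG PYTHON_VERSION
RUN echo "Building on Python ${PYTHON_VERSION}"
```

The default can then be overridden per build with the --build-arg flag of docker build, while a plain build keeps using the pinned default.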

5. Use Multi-Stage Builds

Multi-stage builds are a powerful feature that allows you to use multiple FROM statements in a single Dockerfile, optimizing the size of the final image. This technique is particularly useful for separating the build environment from the runtime environment, which ensures that only the necessary files and dependencies are copied into the final image.

Consider this example for a Go application:

    # Build stage
    FROM golang:1.18 AS builder
    WORKDIR /app
    COPY . .
    # Disable cgo so the binary does not depend on glibc, which Alpine lacks
    RUN CGO_ENABLED=0 go build -o main .

    # Final stage (pinned tag, in line with practice 4)
    FROM alpine:3.15
    WORKDIR /root/
    COPY --from=builder /app/main .
    CMD ["./main"]

With multi-stage builds, you can significantly reduce the final image size by excluding development dependencies and temporary files.


6. Avoid Hardcoding Secrets

Hardcoding sensitive information such as API keys, passwords, or database credentials within your Dockerfile can lead to severe security risks. Instead, use environment variables or Docker secrets to handle sensitive data.

Best Practices for Managing Secrets:

- Use environment variables to pass secrets at runtime.

- Use Docker Secrets (available in Docker Swarm) or secret management tools like AWS Secrets Manager or HashiCorp Vault.

Example:

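    # Bad practice: the value is stored in the image and visible to anyone who pulls it
    ENV API_KEY=mysecretapikey

    # Also unsafe: values passed with --build-arg can be recovered with docker history
    ARG API_KEY

    # Better: declare nothing here and supply the value when the container starts,
    # e.g. docker run -e API_KEY="$API_KEY" myimage   (myimage is a placeholder)

Build arguments are not a safe place for secrets, because their values remain visible in the image history. Supplying the secret at runtime keeps it out of the image entirely.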
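When a secret is genuinely needed during the build (for example, to download a private dependency), BuildKit secret mounts make it available to a single RUN instruction without storing it in a layer. A minimal sketch, assuming BuildKit is enabled; the secret id api_key is a hypothetical name, and the command only checks that the secret is present:

```dockerfile
# syntax=docker/dockerfile:1
FROM alpine:3.15

# The secret is mounted at /run/secrets/api_key for this instruction only
# and is not written to the image. The build fails if no secret was passed.
RUN --mount=type=secret,id=api_key \
    test -s /run/secrets/api_key && \
    echo "secret is available during this step only"
```

The secret is passed on the command line, for instance with docker build --secret id=api_key,src=api_key.txt . where api_key.txt is a local file of your choosing.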

7. Set the USER Instruction

By default, Docker containers run as the root user, which is a security risk as it makes the container more vulnerable to attacks. Instead, create a non-root user and use the USER instruction to switch to that user.

    # adduser -D is the BusyBox syntax used on Alpine-based images
    RUN adduser -D myuser
    USER myuser

Running your application as a non-root user minimizes the risk of privilege escalation in case an attacker gains access to the container.

8. Clean Up After Installing

Whenever you install packages, make sure to clean up unnecessary files within the same RUN command. This practice prevents temporary files from persisting in intermediate layers and inflating the final image size.

    RUN apt-get update && apt-get install -y curl && \
        apt-get clean && rm -rf /var/lib/apt/lists/*

Cleaning caches and temporary files ensures that the Docker image remains as small as possible.

9. Use .dockerignore Files

A .dockerignore file functions similarly to a .gitignore file, ensuring that unnecessary files are excluded from the Docker build context. This helps reduce the amount of data sent to the Docker daemon and ensures that sensitive or irrelevant files are not included in the image.

Example .dockerignore file:

    .git
    node_modules
    Dockerfile
    .dockerignore

This file reduces the build context size, which helps speed up the build process and ensures that the resulting image contains only the necessary files.

10. Employ Dependency Management

When creating Docker images, it’s important to ensure that all dependencies are managed efficiently.

- Pin Versions: Always pin versions of dependencies to ensure that builds are consistent and reproducible. This helps in preventing unexpected changes when dependencies are updated.

- Remove Unnecessary Dependencies: Install only the packages that are required for the application.

Example:

    RUN apt-get update && \
        apt-get install -y --no-install-recommends package

The --no-install-recommends flag skips recommended packages, so only the requested package and its required dependencies are installed.
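Version pinning applies to language-level packages as well. A sketch, with the package and version picked purely as an illustration:

```dockerfile
FROM python:3.9-slim

# Pin an exact version so that every build installs the same release;
# --no-cache-dir keeps pip's download cache out of the layer
RUN pip install --no-cache-dir requests==2.31.0
```

For apt packages, the equivalent form is package=version on the apt-get install command line.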

11. Run as a Non-Root User

Running containers as a non-root user is crucial for maintaining security.

- Create a Dedicated User: Create a user with a RUN instruction, then use the USER instruction to run your application as that user.

Example:

    RUN useradd -m appuser
    USER appuser

This practice ensures that even if an attacker gains access to the container, they have limited privileges.
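A non-root user also needs access to the application files. A sketch for a Debian-based image, reusing the user name appuser and assuming a hypothetical app.py in the build context:

```dockerfile
FROM python:3.9-slim

# Create an unprivileged user with a home directory
RUN useradd -m appuser

WORKDIR /app

# Give the copied files to appuser instead of root
COPY --chown=appuser:appuser . .

# Everything from here on, including the running container, uses appuser
USER appuser

CMD ["python", "app.py"]
```

Placing USER after the installation and copy steps keeps the build itself simple while the running process still has no root privileges.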

12. Scan for Vulnerabilities

Security should be a primary concern when building Docker images. Regularly scanning your images for vulnerabilities is crucial to ensure that known security issues are addressed.

Tools for Scanning:

- Trivy: A popular open-source vulnerability scanner for Docker images.

- Clair: A static analysis tool for identifying vulnerabilities.

- Anchore: Provides deep analysis and compliance reporting for Docker images.

Regularly scan your images and update dependencies to keep your images secure.

13. Utilize Metadata and Labels

Adding metadata to your Docker image using LABEL instructions can provide helpful information about the image, such as the author, version, and intended usage.

    LABEL maintainer="yourname@example.com" \
          version="1.0" \
          description="A sample image for demonstrating Dockerfile best practices"

Metadata can be useful for managing images, especially in a team environment where multiple developers work on the same project.
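Label keys are free-form, but many tools recognize the predefined annotation keys of the OCI image specification. A sketch using those keys; all values, including the repository URL, are placeholders:

```dockerfile
FROM alpine:3.15

# Predefined annotation keys from the OCI image specification
LABEL org.opencontainers.image.title="sample-app" \
      org.opencontainers.image.version="1.0" \
      org.opencontainers.image.description="A sample image for demonstrating Dockerfile best practices" \
      org.opencontainers.image.source="https://example.com/your-org/sample-app"
```

The labels of a built image can be read back with docker inspect.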

14. Optimize Image Size

Optimizing the size of Docker images helps in reducing storage requirements and speeding up deployments.

Best Practices for Reducing Image Size:

- Use minimal base images such as Alpine or Scratch.

- Clean up after installation by removing package caches and temporary files.

- Minimize the number of layers by combining commands using the && operator.

Optimization Tools:

- Docker Slim (now developed as SlimToolkit): A tool that analyzes an image and removes components it finds unnecessary, with the aim of shrinking the image without changing its behavior.

15. Leverage Build Tools and Automation

Incorporate tools that help automate and manage your Docker builds, especially in a CI/CD pipeline.

- Docker Compose: Use Docker Compose for multi-container applications, which helps manage the dependency between different services easily.

- Makefiles: Use a Makefile to automate repetitive tasks like building, tagging, and pushing images.

Example Makefile:

    build:
    	docker build -t myapp:latest .

    deploy:
    	docker run -d -p 8080:8080 myapp:latest

Using Makefiles makes it easier to run builds, especially when working in a team.

Examples of Dockerfile Optimization

Let’s walk through an example to see how we can optimize a basic Dockerfile. Consider a Dockerfile that initially looks like this:

    FROM ubuntu:latest
    RUN apt-get update
    RUN apt-get install -y python3
    RUN apt-get install -y curl
    COPY . /app
    CMD ["python3", "/app/app.py"]

Optimized version:

    FROM ubuntu:latest
    RUN apt-get update && apt-get install -y python3 curl && \
        apt-get clean && rm -rf /var/lib/apt/lists/*
    WORKDIR /app
    COPY . .
    CMD ["python3", "app.py"]

In the optimized version, we:

- Combined RUN instructions to minimize layers.

- Added cleanup commands to reduce image size.

- Used WORKDIR to simplify COPY and CMD instructions.

Common Pitfalls to Avoid

- Using latest tags: Instead of using FROM ubuntu:latest, use a specific version like FROM ubuntu:20.04 to avoid unpredictability in builds.

- Not specifying a WORKDIR: By using a WORKDIR, you make sure that subsequent commands are executed in the right directory, improving readability and preventing errors.

- Ignoring security updates: It’s essential to keep base images updated. Always check for and apply security updates regularly.
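Applying these points to the optimized Dockerfile from the previous section could look like the following sketch. The user name appuser is an arbitrary choice, and app.py is the same assumed entry point as before.

```dockerfile
# Pinned release instead of ubuntu:latest
FROM ubuntu:20.04

# Install only what is needed and clean up in the same layer.
# DEBIAN_FRONTEND=noninteractive keeps package configuration from prompting.
RUN apt-get update && \
    DEBIAN_FRONTEND=noninteractive apt-get install -y --no-install-recommends python3 curl && \
    apt-get clean && rm -rf /var/lib/apt/lists/*

# Create an unprivileged user for running the application
RUN useradd -m appuser

WORKDIR /app
COPY --chown=appuser:appuser . .

USER appuser
CMD ["python3", "app.py"]
```

Rebuilding regularly against the pinned tag picks up the security updates published for that release without switching to a different major version.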

Tools for Dockerfile Analysis

There are several tools available that can help you analyze and optimize your Dockerfiles:

- Dockerlint: Checks Dockerfiles for issues and common mistakes.

- Hadolint: A Dockerfile linter that helps enforce best practices.

- Trivy: Scans your Docker images for vulnerabilities.

- Dive: Helps you explore a Docker image layer by layer to understand how to optimize it.

Using these tools can help you maintain high standards for your Docker images, ensuring security and efficiency.

Security Considerations for Dockerfiles

Building secure Docker images requires considering security at every stage of the Dockerfile creation process.

- Use Official Images: Prefer official images from trusted sources, as they are regularly updated and maintained.

- Limit Resource Capabilities: Use the --cap-drop flag of docker run to limit the Linux capabilities available to a container.

- Avoid Privileged Mode: Never run a container in privileged mode unless absolutely necessary, as it grants extended privileges to the container, potentially compromising the host system.

Performance Optimization Tips

Optimizing Docker images for performance involves reducing build times, improving runtime efficiency, and ensuring that images are lean and agile.

- Use Cache Strategically: Leverage Docker’s build cache to speed up rebuilds.

- Layer Reuse: Optimize the order of instructions to maximize layer reuse.

- Reduce Context Size: Keep your build context minimal to speed up the image-building process.
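Beyond the layer cache, BuildKit cache mounts let a package manager keep its download cache between builds without adding it to the image. A sketch, assuming BuildKit is enabled and a hypothetical requirements.txt and app.py in the build context:

```dockerfile
# syntax=docker/dockerfile:1
FROM python:3.9-slim
WORKDIR /app

COPY requirements.txt .

# /root/.cache/pip persists across builds on the build host
# but is not part of the resulting image layer
RUN --mount=type=cache,target=/root/.cache/pip \
    pip install -r requirements.txt

COPY . .
CMD ["python", "app.py"]
```

Even when requirements.txt changes and the install layer has to be rebuilt, packages that were downloaded before can be taken from the mounted cache.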

Using Docker in CI/CD Pipelines

Docker is a perfect fit for CI/CD pipelines, enabling consistent and reliable deployments across different environments.

- Docker and Jenkins: Use Docker agents for Jenkins pipelines to isolate and manage build environments.

- GitLab CI/CD: Utilize Docker images as part of GitLab CI/CD to test and deploy applications in consistent environments.

- Kubernetes Integration: Use Docker images within Kubernetes for orchestrated deployments.

Integrating Docker into CI/CD pipelines helps automate the entire process of building, testing, and deploying containerized applications, making it an essential tool for DevOps practices.

Conclusion

Writing an efficient Dockerfile requires careful consideration of best practices, such as using lightweight base images, minimizing layers, avoiding hardcoded secrets, leveraging multi-stage builds, and employing security best practices. By incorporating these practices, you can create Docker images that are smaller, faster, and more secure.

Remember, a clean Dockerfile means a clean image — and that translates to reduced costs, improved performance, and better maintainability.

Take the time to explore these techniques, experiment, and continuously refine your Dockerfiles. The more optimized they are, the better your containers will perform.